This is a paraphrase of a talk I gave at the first-ever Not My Circus AI lightning talks event.

I’ve seen a lot of organisations struggle with AI tool adoption. Not because the tools aren’t good, but because they’re approaching it like a technology rollout instead of a change programme.

The mistake most organisations make

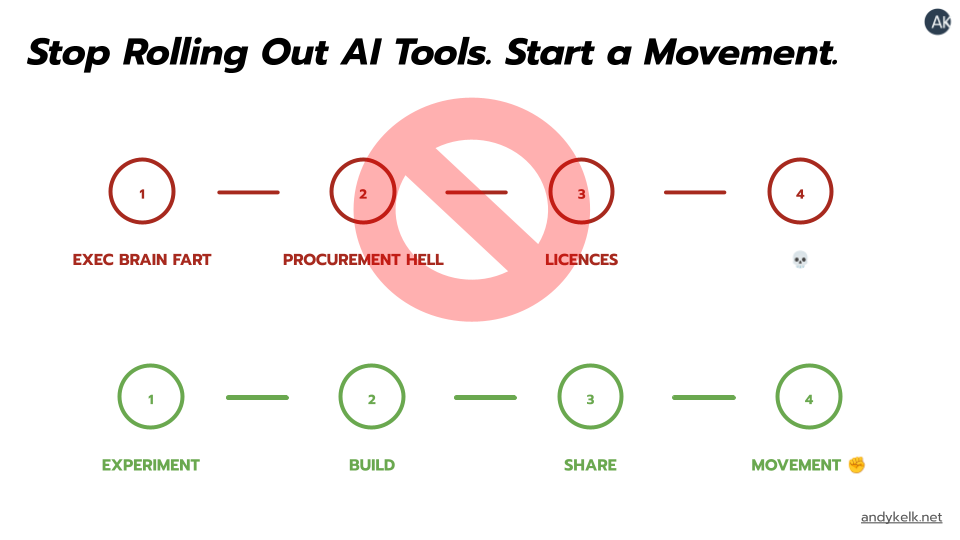

The pattern is familiar: an exec gets excited about AI, makes a proclamation, procurement does their thing, licences get handed out, there’s a Slack announcement… and then tumbleweed. Or worse, active resistance.

The core mistake is treating this as a technology problem (“build it and they will come”) when it’s actually a people problem. It needs a change management approach and a different playbook.

My path through AI-assisted coding

As with a lot of teams, the first AI-assisted coding we used was GitHub Copilot in its autocomplete form - that’s just what was available at the time. People found it useful but not transformative. It was a nice productivity boost: finish your lines faster; write boilerplate quicker. But it didn’t fundamentally change how anyone worked.

Then agent-based tools arrived - Copilot agent mode, Claude Code, Q Developer. These tools actually are transformative because they change the work from writing lines of code to shaping solutions.

I have seen two reactions at this point: either that this is another incremental improvement, or that it’s a threat to people’s jobs.

If people see agent mode as just “a better autocomplete,” they’ll dismiss it. You need to build the understanding that this is a whole new way of working. You’re not just getting autocomplete, you’re collaborating with something that can scaffold entire features, refactor codebases, and translate designs into code.

If people see agent mode as a threat to their livelihood, you need to understand why. My take is that it’s about identity. “Am I still a real engineer if the AI writes the code?” “What’s my value if the machine can do the implementation?”

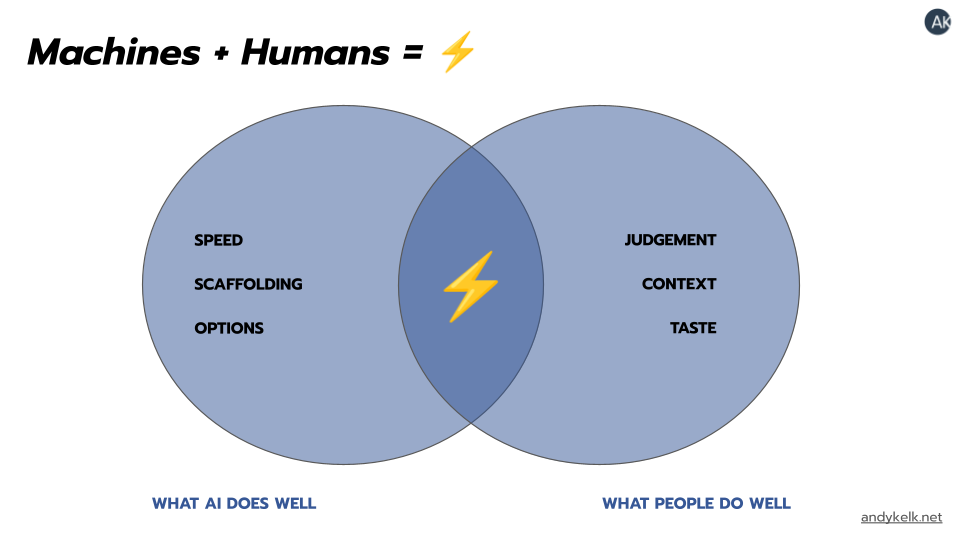

This all comes down to one thing: human judgement.

The machine can generate code, but it can’t make the architectural decisions, understand the business context, or weigh the trade-offs between technical debt and delivery speed. That’s still you. That’s still us.

If you try to roll out agent tools to a few teams without guidance and just let them get on with it, you’ll find that you get a lot of apathy or a lot of pushback. A common reaction is for people to feel like we are signalling that their skills are obsolete.

Permission to experiment

A lot of industry figures will come out with great proclamations about how agentic coding will change the shape of the teams of the future. The reality is that nobody really knows right now. We’re all guessing, we’re all experimenting, we’re all trying things and seeing what works.

You need to see how these tools work in your context. Give small groups explicit permission to experiment with agent-based tools and report back. We didn’t mandate that people did this, we just said “try this, see what works, tell us what you learn.”

That’s how we got real feedback. Some of it was positive, some was negative, but all of it was useful. And it was coming from the team, not from me or the exec.

Real value comes from AI amplifying the judgements that engineers are making. The AI can generate ten different approaches to a problem quickly, and it gives the engineer the opportunity to evaluate them, pick the right one, and adapt it. That’s human judgement. That’s what makes you valuable.

A quick side note on governance, because this often comes up. Some of the pushback you get might mention the word “slop”. Yes, an AI agent without guardrails may well produce unmaintainable code. But once we’ve been clear on the framing of the transformation (we’re not replacing you), you can have conversations that are less defensive and more practical. You still need guardrails (security reviews, coding standards, testing), but make sure they work as safeguards rather than as a way to block adoption.

Real work beats demos every time

At some point, we had to move past experiments and build things that actually mattered: things that would make our lives easier using AI.

One team built a tool that takes design files and converts them into production code using existing component libraries. That was real value, created by the team, for the team.

Of course they still reviewed what came out and made decisions about what was good enough, and what needed rework. The AI gave them a starting point; their judgement made it production-ready.

We also ran a two-day all-in hack event across all departments: product, design, engineering, marketing, customer service. The brief was simple: build something with AI that makes your day better. People got involved both by building and by bringing the pain points. And because it was cross-functional, we started seeing AI applications beyond just code - using agent builders, low-code tools, and vendor solutions. And some of those solutions were ready to go into production.

Bring in external help

We were also lucky enough to bring in partners to help with hackathons and talks, and to offer other perspectives. When you are deep in the weeds of the day-to-day and in your own context, hearing from external speakers about how they’re using these tools often lands differently from hearing it internally. Outside voices help challenge assumptions.

Beyond engineering

If you only focus on engineering, you’re missing big pieces of the lifecycle. We built an agent to help product managers write and review initiative canvases. We showed that this wasn’t just about code but about the whole product development process.

In my experience (and I’ll caveat this heavily), designers and UX teams can sometimes be more resistant to AI tooling. I think I understand why: the identity of creative professionals is even more tied to the craft than an engineer’s. The idea that AI could generate designs feels like an existential threat.

This is another case where we need clarity on where humans get involved in the process and where machines can do the work. An AI can create a great-looking design, but machines don’t have taste or judgement. They may make you think they do, but they don’t. They can’t tell you whether it’s the right solution for your users, your business, and your constraints.

The designer’s value is not in pushing pixels but in empathy and judgement about what works and why. The AI can generate options; you decide which one is right.

The key is making sure that people - designers, engineers, anyone - see the machine as an aid to their judgement, not a replacement for it, and that they continue to apply critical thinking, because that’s what makes them valuable.

Back your change agents

In my experience, earlier-career engineers are often more open to AI adoption. They don’t have the same attachment to “how we’ve always done things.” If AI makes them more productive, they’re in. They are learning that their value isn’t in memorising syntax or knowing every API by heart but in their ability to solve problems.

Creating a change collective - a group of champions from across the organisation at different levels, but with a strong representation from junior and mid-level folks - can really help provide leadership to the rest of the organisation. Giving them space to experiment, to share what they learned and to influence the rest of the team can be really powerful.

My one thing: framing

If you can only do one thing differently, think about how you’re framing AI. Understand where resistance is coming from. Are people seeing it as another incremental improvement and ignoring it, or as an existential threat?

How you position AI - as a transformative way of working that needs as much (if not more!) human judgement as before - will determine your success.

Everything else - the hack days, the change collective, the pilots - builds on that foundation.

Where now?

This space is moving too fast for anyone to have all the answers. What worked for us six months ago might not work today. And it depends so much on your context.

But what I do know is that if you treat AI tool adoption as a change programme rather than a technology rollout, you’ll get further.

- Frame it properly - agent tools are transformative and rely on human judgement

- Give people permission to experiment

- Build real things

- Back your change agents

We’re not being replaced - we’re being augmented. The people who figure out how to exercise judgement in an AI-augmented world are going to be incredibly valuable.